Contrary to the popular fear that your phone’s microphone is always listening, the reality is far more sophisticated and subtle. Predictive advertising doesn’t read your mind; it analyzes your ‘digital twin’—a statistical model built from thousands of your online and offline behaviors. By finding what people statistically identical to you have already purchased or desired, algorithms can predict your next move with unnerving accuracy, turning a feeling of personal violation into a predictable, profitable transaction.

Have you ever had a conversation about a niche product, only to see an ad for it moments later on your social media feed? The immediate suspicion for many is unsettling: “My phone is listening to me.” This common fear, while understandable, often misses the real story. The truth isn’t about clandestine eavesdropping; it’s about a far more powerful and pervasive system of observation and inference.

Advertisers don’t need to listen to your voice because they are meticulously watching your actions. Every click, every pause, every purchase, and even the way you move your mouse creates a stream of data. This data is used to build a sophisticated profile, a ‘digital twin’ or statistical doppelgänger, that doesn’t just reflect who you are but predicts who you will become as a consumer. The unnerving accuracy of these ads comes not from spying on a single person, but from analyzing the aggregated behavior of millions.

But if the key isn’t a hot mic, what is it? The real mechanism lies in behavioral inference and probabilistic matching—techniques that connect your offline life (like credit card purchases) to your online identity. This article will demystify this process. We will explore how your digital twin is constructed, examine the biases and risks it creates, and most importantly, outline the concrete steps you can take to confuse these systems and regain a sense of control over your digital self.

To fully grasp how this invisible architecture of prediction operates, this guide breaks down its core components. From the data you unknowingly provide to the ways you can strategically reclaim your privacy, the following sections will provide a clear roadmap into the world of predictive analytics.

Summary: How Predictive Analytics Decodes Your Online Behavior

- Why Does Your Credit Card History Influence the Ads You See on Social Media?

- How to Confuse Tracking Algorithms to Protect Your Digital Profile?

- Surveys vs. Mouse Movement: Which Data Tells Companies More About You?

- The Pricing Bias: Are You Paying More Because of Your Zip Code?

- How to Curate Your Ad Preferences to See Useful Products Instead of Junk?

- Why Do Cameras No Longer Need Your Face to Identify You?

- App vs. Browser: Why You Spend 25% More on a Small Screen?

- How Much Data Do Smart Streetlights Collect About Your Movements?

Why Does Your Credit Card History Influence the Ads You See on Social Media?

The link between your offline spending and online ads is not magic; it’s a data science technique called probabilistic matching. Social media platforms don’t get a direct feed from your bank, but they can purchase anonymized transaction data from third-party data brokers. This data includes what you bought, where, and when, but not your name. The platform then matches this data to user profiles using statistical probabilities. If a user’s location, age, and demographic data on the platform closely match the profile of an offline purchase, the system makes an educated guess and links the two. Your credit card history becomes a powerful “seed” for building your digital twin.
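To make the idea concrete, here is a minimal Python sketch of probabilistic matching. It is an illustration only: the `Profile` fields, the `WEIGHTS` values, and the 0.6 threshold are invented for this example, and real systems would use many more signals with weights estimated from data.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    user_id: str
    zip_code: str
    age_band: str
    seen_near_store: bool  # hypothetical location signal held by the platform


# Hypothetical weights: how strongly each agreeing attribute suggests a match.
WEIGHTS = {"zip_code": 0.5, "age_band": 0.2, "seen_near_store": 0.3}


def match_score(purchase: dict, profile: Profile) -> float:
    """Add up the weights of the attributes on which purchase and profile agree."""
    score = 0.0
    if purchase["zip_code"] == profile.zip_code:
        score += WEIGHTS["zip_code"]
    if purchase["age_band"] == profile.age_band:
        score += WEIGHTS["age_band"]
    if profile.seen_near_store:
        score += WEIGHTS["seen_near_store"]
    return score


def best_match(purchase: dict, profiles: list, threshold: float = 0.6):
    """Return the highest-scoring profile, or None if no score reaches the threshold."""
    top = max(profiles, key=lambda p: match_score(purchase, p))
    return top if match_score(purchase, top) >= threshold else None
```

The threshold is what makes the link an "educated guess": a nameless purchase is attached to a profile only when enough independent signals agree, and it is discarded otherwise.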

Once this connection is made, you are placed into “lookalike audiences.” If you frequently buy high-end organic groceries, you will be grouped with other users who do the same. Advertisers can then target this entire group, assuming that if your peers bought a certain product, you are highly likely to be interested as well. It’s a powerful tool for marketers, especially as privacy measures tighten. Even with recent privacy updates from Apple that restrict direct tracking, lookalike audiences remain a cornerstone of targeted advertising.
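A lookalike audience can be sketched in a few lines of Python. This is a simplified assumption of how such grouping might work, not any platform's actual method: each user is a hypothetical vector of spending shares, and anyone whose vector points in nearly the same direction as the average "seed" customer is added to the audience.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def centroid(vectors):
    """Component-wise average of the seed customers' vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def lookalikes(seed, candidates, min_similarity=0.9):
    """Return the ids of candidates whose behavior resembles the average seed customer."""
    center = centroid(seed)
    return sorted(
        uid for uid, vec in candidates.items()
        if cosine(vec, center) >= min_similarity
    )


# Hypothetical features: share of spending on [organic groceries, gaming, outdoor gear].
seed_customers = [[0.8, 0.0, 0.2], [0.7, 0.1, 0.2]]
candidates = {"a": [0.9, 0.0, 0.1], "b": [0.1, 0.9, 0.0]}
audience = lookalikes(seed_customers, candidates)  # only "a" resembles the seed
```

Notice that the advertiser never needs to know anything personal about user "a". Statistical resemblance to existing buyers is enough.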

Case Study: LiveRamp’s Lookalike Modeling Success

The effectiveness of this method is well-documented. For instance, the advertising technology company Choozle partnered with data onboarding service LiveRamp to refine its lookalike modeling. By using high-quality offline data to build its models, Choozle generated 50% more leads compared to a competing provider. Furthermore, they achieved an impressive 80% lead qualification rate, demonstrating just how accurately these models can predict consumer intent based on statistical similarities.

As data scientist Ravish Yadav notes, this process is continuously refined: “Through analysis of demographics, browsing behavior and interactions, machine learning algorithms will fine-tune targeting approaches in real-time, resulting in improved programmatic ad purchases.” This real-time optimization means your digital twin is constantly learning and evolving based on both your online signals and inferred offline behavior, making the ads you see increasingly specific.

How to Confuse Tracking Algorithms to Protect Your Digital Profile?

If predictive algorithms thrive on clean, consistent data, the most effective defense is to introduce noise. This strategy, known as signal contamination or data poisoning, involves deliberately feeding algorithms false or random information to make your digital twin less accurate and therefore less valuable. Instead of trying to be invisible, you become illegible. The goal is to create so much conflicting data that algorithms can no longer confidently place you in a specific demographic or interest group. This disrupts their ability to serve hyper-targeted ads or apply discriminatory pricing.

This can be achieved through various methods, from simple changes in browsing habits to using specialized tools. For example, using browser containers or separate profiles for different online activities (work, shopping, personal) prevents companies from building a single, unified profile of your life. Every isolated session contributes to a fragmented identity that is harder to monetize. By consciously “liking” random hobbies on social media or searching for topics far outside your actual interests, you actively muddy the waters of your behavioral data.

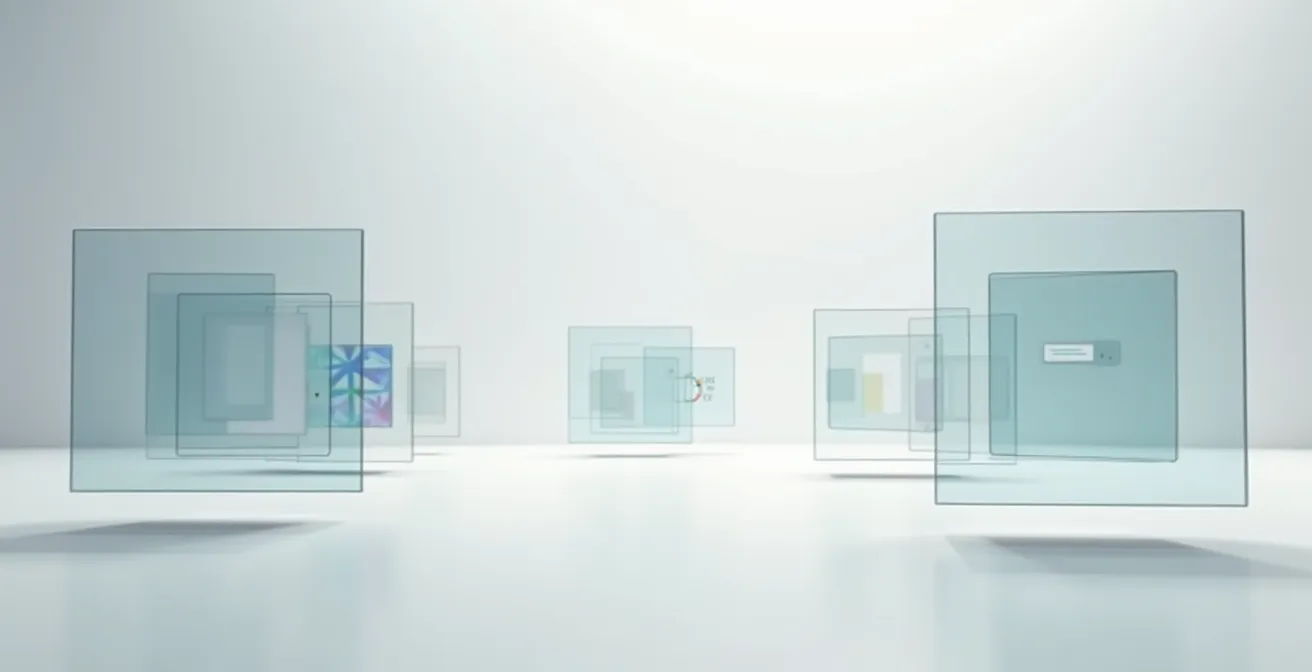

As the image above conceptualizes, each container acts as a separate identity, breaking the chain of tracking from one part of your digital life to another. This act of digital fragmentation is a powerful, proactive step towards privacy. It moves beyond passive measures like clearing cookies and empowers you to actively shape how you are perceived by automated systems. The following checklist provides a practical guide to implementing these data obfuscation techniques.

Action Plan: Obfuscate Your Digital Identity

- Use browser containers to separate work, personal shopping, and social media activities into isolated profiles.

- Create a “honeypot” email address specifically for low-trust sign-ups and newsletters to divert tracking.

- Actively feed false signals by liking random hobbies and interests on social platforms.

- Employ tools like AdNauseam that automatically click every ad to poison your behavioral data.

- Add stochastic behaviors by intentionally randomizing your browsing patterns and timing.
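One way to picture what the false signals in this checklist do is to measure how "peaked" an interest profile is before and after the noise. The Python sketch below uses Shannon entropy as that measure; the category names and event counts are invented, and real ad systems may weigh signals quite differently.

```python
import math
import random
from collections import Counter


def entropy_bits(events):
    """Shannon entropy of the interest distribution; higher means harder to pin down."""
    counts = Counter(events)
    total = len(events)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def add_noise(events, categories, n_fake, seed=0):
    """Append n_fake interest events drawn uniformly at random from categories."""
    rng = random.Random(seed)
    return events + [rng.choice(categories) for _ in range(n_fake)]


# Hypothetical history: 18 gardening signals and 2 cooking signals.
real = ["gardening"] * 18 + ["cooking"] * 2
categories = ["gardening", "cooking", "gaming", "golf", "knitting",
              "crypto", "fishing", "opera"]
noisy = add_noise(real, categories, n_fake=40)
```

The clean history has an entropy of roughly 0.47 bits, meaning it is dominated by a single interest. Mixing in random signals pushes that figure up, which is the sense in which you become "illegible" to the algorithm.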

Surveys vs. Mouse Movement: Which Data Tells Companies More About You?

Companies have long used surveys and feedback forms to understand their customers. However, this “declared data”—what you choose to tell them—is often less reliable than “observed data.” People may misremember, aspire to be seen a certain way, or simply not know their true preferences. This is why behavioral inference, the science of deducing intent from actions, is so valuable. And one of the most revealing actions you take online is how you move your mouse.

Your cursor is not just a pointer; it’s an extension of your thought process. Hesitations, rapid movements, circular motions, and even the speed at which you travel from one part of the screen to another are all valuable signals. A user who confidently moves their mouse directly to the “purchase” button behaves differently from one who hovers over multiple options, returns to the product description, and hesitates over the price. These micro-behaviors reveal confidence, confusion, frustration, and intent far more honestly than a five-star rating.

Plotted as a heat map, these patterns become visible: bright spots mark hesitation, while trails trace the path of consideration. Researchers have also used such patterns to infer user emotions. In one study, subjects were given a deliberately slow interface to induce frustration. By analyzing only their clicking patterns, the system could identify frustrated users with significant accuracy. Your emotional state can therefore be inferred without you ever typing a word, which makes mouse dynamics a powerful tool for data-driven empathy at scale, but also a deeply invasive form of surveillance.
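The kind of signals described in this section can be extracted with very little code. The following Python sketch is an assumption about what such feature extraction might look like, not a description of any vendor's product: it takes timestamped cursor positions and derives path length, straightness, and the number of pauses. The 0.5-second pause threshold is arbitrary.

```python
import math


def cursor_features(samples, pause_threshold=0.5):
    """Summarize a cursor trace given as time-ordered (t_seconds, x, y) samples."""
    path = 0.0
    pauses = 0
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        path += step
        # A pause is a stretch where the cursor did not move for long enough.
        if step == 0 and (t1 - t0) >= pause_threshold:
            pauses += 1
    (_, xs, ys), (_, xe, ye) = samples[0], samples[-1]
    straight = math.hypot(xe - xs, ye - ys)
    return {
        "path_length": path,
        # 1.0 means a perfectly direct movement; lower values mean wandering.
        "straightness": straight / path if path else 1.0,
        "pauses": pauses,
        "duration": samples[-1][0] - samples[0][0],
    }


# A confident user goes straight to the button; a hesitant one stops and doubles back.
direct = [(0.0, 0, 0), (0.2, 30, 40), (0.4, 60, 80)]
hesitant = [(0.0, 0, 0), (0.3, 30, 40), (1.3, 30, 40), (1.6, 0, 0), (1.9, 60, 80)]
```

Both traces end on the same button, yet the direct one has a straightness of 1.0 and no pauses, while the hesitant one scores 0.5 with one pause. That difference is the behavioral signal.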

The Pricing Bias: Are You Paying More Because of Your Zip Code?

The same predictive analytics that powers targeted ads also fuels a more controversial practice: dynamic pricing. This is the real-time adjustment of prices based on a customer’s perceived ability and willingness to pay. While airlines have used this for decades, machine learning has made it possible for nearly any online retailer to implement it at an individual level. Your location, device type, browsing history, and even your laptop’s battery level can be used to calculate a personalized price for the exact same product.

This practice moves from commercially savvy to ethically questionable when it reinforces existing societal biases. An algorithm may not be programmed to be racist or classist, but if it learns from data that reflects historical inequities, it will replicate them. For example, if it learns that users in a certain zip code are more likely to pay a higher price, it will charge them more, regardless of their individual financial situations. This creates a feedback loop of algorithmic discrimination.

A stark example of this was uncovered by a ProPublica investigation into The Princeton Review’s SAT prep course prices. The investigation found that Asians were nearly twice as likely as non-Asians to be shown a higher price. The disparity arose because prices varied by zip code, and zip codes with large Asian populations were more likely to be quoted the higher prices. As New York State Assemblymember Emérita Torres rightly argues, “Your price shouldn’t be determined by your ZIP code, race, or income level.”
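How a pricing rule that never looks at race or income can still produce unequal outcomes is easier to see with a toy example. In the Python sketch below, every number is invented: the price depends only on zip code, but because two hypothetical groups are distributed unevenly across zip codes, one group sees the higher price twice as often.

```python
from collections import defaultdict

# Hypothetical zip-level prices. No group attribute is used anywhere in the rule.
PRICE_BY_ZIP = {"11111": 120, "22222": 100}
BASE_PRICE = 100

# Invented population of (zip_code, group) pairs with uneven geographic spread.
CUSTOMERS = (
    [("11111", "A")] * 60 + [("22222", "A")] * 40
    + [("11111", "B")] * 30 + [("22222", "B")] * 70
)


def high_price_rate_by_group(customers):
    """Share of each group that is shown a price above the base price."""
    shown_high = defaultdict(int)
    total = defaultdict(int)
    for zip_code, group in customers:
        total[group] += 1
        if PRICE_BY_ZIP[zip_code] > BASE_PRICE:
            shown_high[group] += 1
    return {group: shown_high[group] / total[group] for group in total}


rates = high_price_rate_by_group(CUSTOMERS)  # {"A": 0.6, "B": 0.3}
```

The zip code acts as a proxy: removing the sensitive attribute from the model does not remove the disparity from the outcome.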

The factors that influence these prices extend far beyond geography. The table below outlines just a few of the signals that can be used to adjust what you pay, often without your knowledge or consent.

| Factor | How It’s Used | Price Impact |
|---|---|---|
| Device Type | New Apple vs. old Android | Premium device users charged more |
| Time of Day | Night travel searches | Higher prices after business hours |
| Battery Level | Low battery detection | Urgency pricing applied |
| Shopping Patterns | Browsing history analysis | Inferred income affects pricing |

How to Curate Your Ad Preferences to See Useful Products Instead of Junk?

While confusing algorithms is a valid defensive strategy, an alternative approach is to take control and actively teach them what you want. Instead of a barrage of irrelevant ads, you can curate your advertising experience to be genuinely useful. This involves moving from a passive data subject to an active profile curator. Platforms like Google and Facebook provide dashboards where you can see the interests and demographic categories they have assigned to you. Taking the time to prune this list is a powerful act of digital hygiene.

The process is straightforward: access your ad preference settings and begin upvoting or adding interests that align with your actual values and needs, while downvoting or removing the ones that are incorrect or irrelevant. If you’re a passionate gardener who is being shown ads for video games, you can explicitly tell the algorithm it has made a mistake. By doing so, you are providing high-quality, “first-party” data that is far more valuable to the system than its own inferences. This improves the relevance of the ads you see and makes your digital twin a more accurate, and ultimately more helpful, reflection of your interests.

You can also be strategic with your browsing signals. Before a major purchase, such as a new camera, spend time on high-quality review sites and the homepages of brands you respect. These high-intent signals tell algorithms that you are a discerning customer conducting research. This can lead to more relevant ads for accessories or competing products, rather than a flood of low-quality junk. An Australian travel company demonstrated this principle by using its own customer data as a “seed” audience, which allowed them to build a lookalike strategy that effectively reached a highly relevant and new audience, proving that better data in leads to better results out.

Why Do Cameras No Longer Need Your Face to Identify You?

Facial recognition is a well-known form of biometric identification, but a new, more subtle category is emerging: behavioral biometrics. This technology identifies you not by your static physical features, but by your unique patterns of movement and interaction. As defined in one academic paper, it is “the measurement and analysis of human-specific behavioral traits based on human movement or their interaction with computer parts.” This means that cameras can now identify individuals based on their gait (the way they walk), their posture, or their gestures, even if their face is obscured.

Unlike physical biometrics like fingerprints or faces, which can be altered by injury or environment, these behavioral patterns are remarkably stable over time. You develop a consistent rhythm and style of movement that acts as a reliable signature. Advanced surveillance systems in public spaces can analyze video feeds to track individuals through a crowd by locking onto their unique gait, even as they move in and out of view. This form of re-identification is far more difficult to evade than traditional facial recognition, as you can’t easily change the way you walk.

This technology represents a significant shift in surveillance capabilities. It moves beyond identifying *who* you are to analyzing *how* you are. According to research on behavioral biometrics, these patterns are deeply ingrained and difficult to forge, making them a robust identifier. For security applications, this offers a way to spot an unauthorized person in a restricted area without needing a clear view of their face. However, for privacy, the implications are chilling: a world where you can be tracked and identified by the very way you move through it.

App vs. Browser: Why You Spend 25% More on a Small Screen?

The choice between shopping on a company’s mobile website or through its dedicated app might seem trivial, but it has a significant impact on your spending. The app is not just a convenient shortcut; it is a controlled environment designed to maximize revenue. Within an app, the company controls the entire user experience. There are no competitor ads, no distracting browser tabs, and no easy way to price-compare. You are in their “walled garden,” where they can deploy personalized pricing and persuasive design with maximum effect.

Apps can also gain deeper access to your phone’s data and sensors than a browser typically allows. They can use push notifications to create urgency, access your location data to offer localized deals, and generally build a much richer profile of your habits. This wealth of data allows for hyper-personalized experiences and pricing. For example, a large-scale experiment by ZipRecruiter used a machine learning algorithm to offer individualized prices to thousands of new customers based on features like their firm size and job type, showing how finely prices can be tailored once a company holds detailed data about each customer.

The financial incentive for companies to push users towards their apps is substantial. A field experiment published as a National Bureau of Economic Research working paper found that personalized pricing can improve a company’s profits by as much as 19 percent compared to uniform pricing. When you are in an app—a frictionless, personalized, and distraction-free environment—you are more susceptible to impulse buys and less likely to question the price you are being offered. The smaller screen and focused interface reduce cognitive load, making it easier to click “buy” without overthinking.
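The logic behind that profit gain can be shown with simple arithmetic. The Python sketch below compares one price for everyone against individually tailored prices. It assumes zero marginal cost (so revenue equals profit), a hypothetical list of four customers' willingness to pay, and a seller who captures 90% of each estimate; the numbers are invented and are not meant to reproduce the study's figure.

```python
def best_uniform_price(willingness):
    """Try each customer's willingness to pay as the single price; keep the best one."""
    def revenue(price):
        # Only customers willing to pay at least this price will buy.
        return price * sum(1 for w in willingness if w >= price)

    price = max(set(willingness), key=revenue)
    return price, revenue(price)


def personalized_revenue(willingness, capture=0.9):
    """Charge each customer a fraction of their own estimated willingness to pay."""
    return sum(capture * w for w in willingness)


# Hypothetical customers and what each would pay at most.
willingness = [50, 80, 130, 200]
uniform_price, uniform_total = best_uniform_price(willingness)  # price 130, revenue 260
tailored_total = personalized_revenue(willingness)              # about 414
```

A single price forces a trade-off: set it high and lose the cautious buyers, set it low and leave money on the table with the eager ones. Tailored prices avoid that trade-off, which is why the data that estimates your willingness to pay is so valuable.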

Key Takeaways

- Predictive analytics relies on ‘digital twins’—statistical models of your behavior—not on listening to your conversations.

- What you do (behavioral data like mouse movements) is far more revealing to algorithms than what you say (declared data like surveys).

- You can regain agency by either contaminating your data profile with false signals or by actively curating it to reflect your true interests.

How Much Data Do Smart Streetlights Collect About Your Movements?

The architecture of predictive analytics is moving from our screens into our streets. “Smart city” infrastructure, such as intelligent streetlights equipped with sensors and cameras, represents the next frontier of data collection. These devices can do much more than just provide light; they can monitor traffic flow, detect available parking spaces, and measure air quality. They can also capture vast amounts of data about the public’s movements, transforming physical space into a trackable environment.

These systems can collect data on pedestrian and vehicle density, routes, and dwell times. While often framed as a tool for urban planning and public safety, this infrastructure can create a permanent record of public life. The same behavioral biometric techniques used to identify people by their gait can be deployed across a city-wide network of cameras, enabling persistent tracking of individuals without ever needing to see their faces. This raw data can also feed “surveillance pricing,” where charges for services like parking or congestion zones are adjusted dynamically, not only in response to real-time demand but potentially based on what the data reveals about the people paying.
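Dwell time, one of the metrics mentioned above, is straightforward to derive once a sensor can recognize the same device or person twice. The Python sketch below assumes a hypothetical stream of `(token, zone, timestamp)` sightings, where the token is whatever pseudonymous identifier the sensor assigns; it is an illustration, not a description of any deployed system.

```python
def dwell_times(pings):
    """Seconds between the first and last sighting of each token in each zone."""
    first_last = {}
    for token, zone, ts in pings:
        key = (token, zone)
        lo, hi = first_last.get(key, (ts, ts))
        first_last[key] = (min(lo, ts), max(hi, ts))
    return {key: hi - lo for key, (lo, hi) in first_last.items()}


# Hypothetical sightings: (pseudonymous token, zone, seconds since midnight).
pings = [
    ("t1", "plaza", 0),
    ("t2", "plaza", 30),
    ("t1", "plaza", 90),
    ("t1", "station", 200),
    ("t1", "station", 260),
]
stays = dwell_times(pings)
```

Even without a name attached, the output already describes a route: token `t1` spent 90 seconds in the plaza and then 60 seconds at the station.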

This expansion of surveillance into the physical world has not gone unnoticed by regulators. A report by the Future of Privacy Forum highlights a growing number of legislative efforts aimed at regulating algorithmic and data-driven pricing, especially in critical sectors. In a significant move, the U.S. Federal Trade Commission (FTC) voted in July 2024 to launch a formal study into the surveillance pricing ecosystem, signaling a new level of scrutiny on how personal data is collected and monetized to set prices. The line between a helpful smart city and a pervasive surveillance state is a thin one, defined by transparency, regulation, and public consent.

To truly reclaim your digital autonomy, the next logical step is to audit your own data footprint and begin implementing these defensive and curative strategies. Start by reviewing your ad preferences on a single platform and take control of your digital twin today.